Fruit Classification

Photo by TechVidvian

DISCLAIMER: This project was my first toy project in deep learning, so don’t expect much if you are already an expert.

Technologies used: MATLAB, MATLAB Deep Learning Toolbox, AWS EC2 (for training; unfortunately, Colab was not around then)

Objective

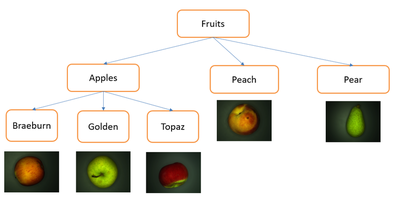

Classification of apple-lookalike fruits, namely Braeburn apple, Golden apple, Topaz apple, pear and peach. These fruits share many visual traits, such as color, shape and surface pattern. Using neural networks, we aim to classify them more accurately than traditional approaches can.

Challenges

Because these fruits look so similar, classifying them with traditional image-processing algorithms, or with hand-crafted feature extraction followed by traditional ML, would be very difficult.

Details and glimpse of Dataset

A total of 2150 images are organised into five different folders as below.

| Class | No. of images |
|---|---|
| Braeburn apple | 450 images |
| Golden apple | 420 images |
| Topaz apple | 400 images |
| Pear | 450 images |
| Peach | 430 images |
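The class counts add up to 2150 and are roughly balanced (each class holds between 18.6% and 20.9% of the images). Before training, images are usually split per class so that every class keeps the same train/validation ratio; in MATLAB, `splitEachLabel` on an `imageDatastore` does this. The Python sketch below only illustrates the arithmetic and assumes a hypothetical 80/20 split, which the original project does not state.

```python
# Per-class image counts, copied from the table above.
CLASS_COUNTS = {
    "Braeburn apple": 450,
    "Golden apple": 420,
    "Topaz apple": 400,
    "Pear": 450,
    "Peach": 430,
}


def stratified_split(counts, train_fraction=0.8):
    """Return {class: (n_train, n_val)}, splitting each class separately.

    train_fraction=0.8 is an illustrative assumption, not the project's setting.
    """
    split = {}
    for name, n in counts.items():
        n_train = round(n * train_fraction)
        split[name] = (n_train, n - n_train)
    return split


total = sum(CLASS_COUNTS.values())
split = stratified_split(CLASS_COUNTS)
for name, (n_train, n_val) in split.items():
    share = CLASS_COUNTS[name] / total
    print(f"{name:<15} {share:6.1%}  train={n_train}  val={n_val}")
print("total:", total)
```

Splitting per class rather than over the pooled 2150 images guarantees that the smallest class (Topaz apple) is not under-represented in validation by chance.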

Sample images

Two sample images of each class are shown below for reference.

| Fruit name | Image sample 1 | Image sample 2 |
|---|---|---|
| Braeburn apple |  |  |
| Golden apple |  |  |
| Topaz apple |  |  |
| Pear |  |  |
| Peach |  |  |

Data Augmentation

DNNs generally need a large amount of training data to perform well. As the table above shows, we have at most 450 images per class, which is far from enough. Instead of collecting more data, we relied on image augmentation: creating artificial training images from the existing ones through techniques such as random rotation, shifting, shearing and flipping.
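Each of these augmentations is a geometric transform of the pixel grid. In MATLAB this is typically configured with `imageDataAugmenter` (name-value options such as `RandRotation`, `RandXReflection`, `RandXTranslation` and `RandXShear`); the settings actually used in this project are not given. The dependency-free Python sketch below shows what such a transform does to a grayscale image stored as a list of rows: every output pixel is mapped back through the inverse of the flip, shear, rotation and shift, and filled from the nearest source pixel. The parameter ranges in `random_augment` are assumptions for illustration only.

```python
import math
import random


def augment(image, angle_deg=0.0, shear=0.0, shift=(0, 0), flip=False, fill=0):
    """Flip, shear, rotate about the centre, then shift a 2-D image (list of rows).

    Uses inverse mapping with nearest-neighbour sampling; pixels that map
    outside the source image are set to `fill`.
    """
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    dy, dx = shift
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Undo the shift and express the pixel relative to the centre.
            u, v = x - dx - cx, y - dy - cy
            # Undo the rotation (rotate by -angle).
            u, v = cos_a * u + sin_a * v, -sin_a * u + cos_a * v
            # Undo the horizontal shear u' = u + shear * v.
            u = u - shear * v
            # Undo the horizontal flip.
            if flip:
                u = -u
            sx, sy = round(u + cx), round(v + cy)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = image[sy][sx]
    return out


def random_augment(image, rng):
    # Illustrative ranges (assumptions), not the project's settings.
    return augment(
        image,
        angle_deg=rng.uniform(-20, 20),
        shear=rng.uniform(-0.1, 0.1),
        shift=(rng.randint(-3, 3), rng.randint(-3, 3)),
        flip=rng.random() < 0.5,
    )


rng = random.Random(0)
img = [[10 * r + c for c in range(5)] for r in range(5)]
batch = [random_augment(img, rng) for _ in range(4)]
```

Inverse mapping (looping over output pixels and asking where each came from) avoids the holes that appear when source pixels are pushed forward through a rotation.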

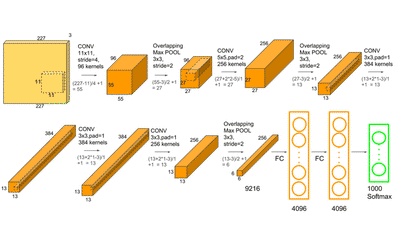

Network Architecture (Good old AlexNet)

AlexNet, a convolutional neural network (CNN), was designed primarily by Alex Krizhevsky and published with Ilya Sutskever and Krizhevsky’s doctoral advisor, Geoffrey Hinton.

AlexNet shot to fame after competing in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012, where it achieved a top-5 error of 15.3%, more than 10.8 percentage points lower than that of the runner-up.

AlexNet Architecture

The AlexNet architecture consists of 5 convolutional layers, 3 max-pooling layers, 2 normalization layers, 3 fully connected layers and a final softmax layer.

Each convolutional layer applies a bank of convolutional filters followed by a ReLU nonlinearity.

The pooling layers perform overlapping max pooling (3x3 windows with stride 2), which downsamples the feature maps.

The input size is fixed because the fully connected layers expect a feature vector of a fixed length.

The input size is often quoted as 224x224x3 (as in the original paper), but the layer arithmetic only works out for 227x227x3, which is the input size implementations such as MATLAB's alexnet actually use.
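The 227 versus 224 discrepancy follows from the output-size formula for a convolution or pooling layer, (n + 2p - k) / s + 1, for input size n, kernel k, stride s and padding p. The sketch below traces the spatial size through AlexNet's conv and max-pool layers using the commonly used settings (conv1 11x11 with stride 4, 3x3 stride-2 pooling, padding 2 for conv2 and 1 for conv3 to conv5). Unlike most frameworks, which silently floor the division, `conv_out` raises an error when the window does not tile the input exactly, which makes the 224 problem visible.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a conv/pool layer: (size + 2*padding - kernel) / stride + 1."""
    span = size + 2 * padding - kernel
    if span % stride != 0:
        raise ValueError(f"input {size} does not tile evenly with kernel {kernel}, stride {stride}")
    return span // stride + 1


# (name, kernel, stride, padding) for AlexNet's conv and max-pool layers.
ALEXNET_SPATIAL = [
    ("conv1", 11, 4, 0),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool5", 3, 2, 0),
]


def trace(size):
    """Return [(layer_name, output_size), ...] for a square input of side `size`."""
    sizes = []
    for name, k, s, p in ALEXNET_SPATIAL:
        size = conv_out(size, k, s, p)
        sizes.append((name, size))
    return sizes


for name, size in trace(227):
    print(f"{name}: {size}x{size}")
print("fc6 input length:", 6 * 6 * 256)  # 9216
```

With 227 the first layer gives (227 - 11) / 4 + 1 = 55 exactly; with 224 it gives 54.25, so `trace(224)` raises. The final 6x6x256 map is flattened into the 9216-long vector the first fully connected layer expects, which is why the input size cannot change.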

Overall, AlexNet has about 60 million parameters.
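The roughly 60 million figure can be checked by counting weights and biases layer by layer. The sketch below assumes the original layer widths and the two-group split in conv2, conv4 and conv5 that comes from the original two-GPU design; normalization and pooling layers have no learnable parameters. The `num_classes` argument shows how swapping the 1000-class output layer for a smaller one changes the count.

```python
# (name, kernel_h, kernel_w, in_channels, out_channels, groups) for conv layers.
# groups=2 reflects the original two-GPU split (an assumption about which
# variant is counted; ungrouped re-implementations have slightly more weights).
CONV = [
    ("conv1", 11, 11, 3, 96, 1),
    ("conv2", 5, 5, 96, 256, 2),
    ("conv3", 3, 3, 256, 384, 1),
    ("conv4", 3, 3, 384, 384, 2),
    ("conv5", 3, 3, 384, 256, 2),
]
# (name, inputs, outputs) for the fully connected layers.
FC = [("fc6", 6 * 6 * 256, 4096), ("fc7", 4096, 4096), ("fc8", 4096, 1000)]


def conv_params(kh, kw, cin, cout, groups):
    # Each filter sees only cin / groups input channels; one bias per output channel.
    return kh * kw * (cin // groups) * cout + cout


def fc_params(n_in, n_out):
    # Weight matrix plus one bias per output.
    return n_in * n_out + n_out


def alexnet_params(num_classes=1000):
    total = sum(conv_params(*layer[1:]) for layer in CONV)
    for name, n_in, n_out in FC:
        if name == "fc8":
            n_out = num_classes  # the output layer is the one resized for a new task
        total += fc_params(n_in, n_out)
    return total


print(alexnet_params())   # 60965224
print(alexnet_params(5))  # 56888709
```

Almost 38 million of the roughly 61 million parameters sit in fc6 alone; dropping the grouping in conv2, conv4 and conv5 raises the total to about 62.4 million.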

We replaced the 1000-class output of the network (the last fully connected layer and the softmax on top of it) with a 5-class one and kept all other pre-trained weights intact, i.e. transfer learning. The model was trained on an EC2 instance at first and later on a GTX 1050 GPU.
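With this approach only the new 5-class layer starts from scratch. A common MATLAB pattern, used in MathWorks' AlexNet transfer-learning example, keeps `net.Layers(1:end-3)` and appends `fullyConnectedLayer(numClasses)`, `softmaxLayer` and `classificationLayer`; whether this project froze the remaining layers or fine-tuned them is not stated. The dependency-free Python sketch below illustrates the fully frozen variant on toy 2-D data: a fixed, hypothetical ReLU layer (`FROZEN_W`) stands in for the pre-trained AlexNet layers, and only the new softmax head is trained with SGD on the cross-entropy loss.

```python
import math
import random

# Hypothetical stand-in for the pre-trained layers: a fixed ReLU layer whose
# weights are never updated during training.
FROZEN_W = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0),
            (0.7, 0.7), (-0.7, 0.7), (0.7, -0.7), (-0.7, -0.7)]


def frozen_features(x):
    # ReLU features plus a constant 1.0 that acts as the head's bias input.
    return [max(0.0, a * x[0] + b * x[1]) for a, b in FROZEN_W] + [1.0]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def head_probs(W, x):
    f = frozen_features(x)
    return f, softmax([sum(w * v for w, v in zip(row, f)) for row in W])


def train_head(samples, num_classes, epochs=100, lr=0.1, seed=0):
    """SGD on cross-entropy that updates only the new head W (num_classes x dim)."""
    rng = random.Random(seed)
    dim = len(FROZEN_W) + 1
    W = [[rng.gauss(0.0, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    order = list(samples)
    for _ in range(epochs):
        rng.shuffle(order)
        for x, label in order:
            f, p = head_probs(W, x)
            for k in range(num_classes):
                g = p[k] - (1.0 if k == label else 0.0)  # dLoss/dlogit_k
                for j in range(dim):
                    W[k][j] -= lr * g * f[j]
    return W


def predict(W, x):
    _, p = head_probs(W, x)
    return max(range(len(p)), key=p.__getitem__)


# Toy 5-class data: one cluster per class around a point on a circle.
rng = random.Random(1)
samples = []
for label in range(5):
    angle = 2 * math.pi * label / 5
    for _ in range(10):
        x = (2 * math.cos(angle) + rng.gauss(0, 0.1), 2 * math.sin(angle) + rng.gauss(0, 0.1))
        samples.append((x, label))

W = train_head(samples, num_classes=5)
accuracy = sum(predict(W, x) == y for x, y in samples) / len(samples)
print(f"training accuracy: {accuracy:.2f}")
```

Freezing everything except the head keeps the number of trainable parameters small, which matters with only about 2150 images; fine-tuning the pre-trained layers as well (usually with a lower learning rate) is the common alternative when more data is available.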